GlobeNewsWire: Soitec announces the latest generation of SOI substrates in its Imager-SOI product line designed specifically for front-side imagers for NIR applications including 3D image sensors. The new SOI wafers are now available in large volumes with high maturity for 3D cameras used in AR and VR, facial-recognition security systems, advanced human/machine interfaces and other emerging applications.

"Our newest Imager-SOI substrates represent a major achievement for our company and a smart way to increase performance in NIR spectrum domain, accelerating new applications in the growing 3D imaging and sensing markets," said Christophe Maleville, EVP of the Digital Electronics Business Unit at Soitec. "Innovative sensor design on SOI is achieved by leveraging our advanced know-how in ultrathin material layer transfer and our extensive manufacturing experience."

The wafers are available in 300mm diameter, with buried oxide (BOX) thickness from 15nm to 150nm and “Epi Ready” top silicon thickness from 50nm to 200nm.

Thursday, November 30, 2017

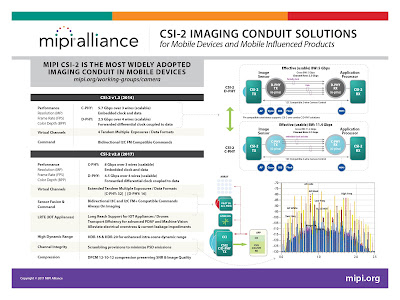

MIPI Alliance Announces Camera Command Set

MIPI Alliance releases a new specification that provides a standardized way to integrate image sensors in mobile-connected devices. The new specification, MIPI Camera Command Set v1.0 (MIPI CCS v1.0), defines a standard set of functionalities for implementing and controlling image sensors. The specification is offered for use with MIPI Camera Serial Interface 2 v2.0 (MIPI CSI-2 v2.0).

MIPI CCS makes it possible to craft a common software driver to configure the basic functionalities of any off-the-shelf image sensor that is compliant with MIPI CCS and MIPI CSI-2 v2.0. The new specification provides a complete command set that can be used to integrate basic image sensor features such as resolution, frame rate and exposure time, as well as advanced features like PDAF, single frame HDR or fast bracketing.

The CCS spec is available for a free download here.
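To illustrate what a "common software driver" can look like, here is a minimal Python sketch of a sensor-agnostic control layer. It assumes the SMIA/SMIA++-style register addresses that CCS is understood to build on (0x0100 mode_select, 0x0202 coarse_integration_time, 0x0340 frame_length_lines, 0x0342 line_length_pck); treat these as assumptions to check against the spec. `FakeBus`, `GenericSensorDriver`, and the simplified timing model (frame time = line_length_pck × frame_length_lines / pixel clock) are hypothetical illustrations, not the CCS API.

```python
# Hypothetical register addresses in the SMIA/SMIA++ style that CCS builds on;
# verify every address against the CCS v1.0 register map before relying on it.
MODE_SELECT = 0x0100              # 0 = software standby, 1 = streaming
COARSE_INTEGRATION_TIME = 0x0202  # exposure, in line periods
FRAME_LENGTH_LINES = 0x0340       # frame period, in line periods
LINE_LENGTH_PCK = 0x0342          # line period, in pixel clock cycles


class FakeBus:
    """In-memory stand-in for the sensor's I2C/CCI control bus (hypothetical)."""

    def __init__(self):
        self.regs = {}

    def write16(self, addr, value):
        if not 0 <= value <= 0xFFFF:
            raise ValueError(f"0x{addr:04x}: {value} does not fit in 16 bits")
        self.regs[addr] = value

    def read16(self, addr):
        return self.regs.get(addr, 0)


class GenericSensorDriver:
    """Sensor-agnostic control layer: only standardized registers are touched."""

    def __init__(self, bus, pixel_clock_hz):
        self.bus = bus
        self.pixel_clock_hz = pixel_clock_hz  # assumed known from the PLL setup

    def set_frame_rate(self, fps, line_length_pck):
        # Simplified model: frame time = line_length_pck * frame_length_lines / pixel clock
        frame_length_lines = round(self.pixel_clock_hz / (fps * line_length_pck))
        self.bus.write16(LINE_LENGTH_PCK, line_length_pck)
        self.bus.write16(FRAME_LENGTH_LINES, frame_length_lines)
        return frame_length_lines

    def set_exposure_us(self, exposure_us):
        # Simplified model: exposure = coarse_integration_time * line_length_pck / pixel clock
        llp = self.bus.read16(LINE_LENGTH_PCK)
        if llp == 0:
            raise RuntimeError("set the line length (frame rate) first")
        lines = max(1, round(exposure_us * self.pixel_clock_hz / (llp * 1e6)))
        self.bus.write16(COARSE_INTEGRATION_TIME, lines)
        return lines

    def stream(self, on):
        self.bus.write16(MODE_SELECT, 1 if on else 0)


drv = GenericSensorDriver(FakeBus(), pixel_clock_hz=240e6)
print(drv.set_frame_rate(fps=30, line_length_pck=3000))
print(drv.set_exposure_us(10_000))
drv.stream(True)
```

The point of the design is that nothing in `GenericSensorDriver` depends on a particular sensor vendor; only the pixel clock, which a real driver would derive from the sensor's PLL configuration, has to be supplied per device.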


Wednesday, November 29, 2017

2D Material Based “Field-Effect CCD”

Many old ideas are recycled with a new twist; in this case, the twist is the new materials. The arXiv.org paper "Two-dimensional material based 'field-effect CCD'" by Hongwei Guo, Wei Li, Jianhang Lv, Akeel Qadir, Ayaz Ali, Lixiang Liu, Wei Liu, Yiwei Sun, Khurram Shehzad, Bin Yu, Tawfique Hasan, and Yang Xu from Zhejiang University, China, State University of New York, USA, and University of Cambridge, UK, says:

"In this work, we reported a novel detecting/imaging device concept called field-effect CCD (FECCD), which is based on CCD’s MOS photogate but requires no charge transfer between pixels (i.e. “couple”). The “couple” is re-defined as the capacitive coupling [21,22] between the semiconductor substrate and the 2D material (e.g. graphene). In the semiconductor, we created the potential well for charge integration by applying a gate voltage pulse. In the 2D material, the non-destructive and direct readout along with the charge signal amplification was realized by the strong field effect.

Besides, the charge integration in our FE-CCD is beneficial for the low-light-level condition, and gives high linearity for accurate image capturing. The FE-CCD also shows a broadband response from visible to short-wavelength infrared (SWIR) wavelength, and its power consumption is readily suppressed by using the 2D-material hetero-junction."

Velodyne Shows "Quantum Leap in LiDAR Technology"

BusinessWire: Under the leadership of visionary inventor and entrepreneur David Hall, Velodyne announces the VLS-128 LiDAR sensor for the autonomous vehicle market. Featuring 128 laser channels, the VLS-128 is said to be "an extreme step forward in LiDAR vision systems, featuring the trifecta of highest resolution, longest range, and the widest surround field-of-view of any LiDAR system available today."

“The VLS-128 is the best LiDAR sensor on the planet, delivering the most advanced real-time 3D vision for safe driving,” said Mike Jellen, President, Velodyne LiDAR. “Automotive OEMs and new tech entrants in the autonomous space have been hoping and waiting for this breakthrough.”

Velodyne’s VLS-128 succeeds the HDL-64, still considered to be the industry benchmark for high resolution. Velodyne’s new flagship model has 10 times the resolving power of the HDL-64.

“The Velodyne VLS-128 is an all-out assault on high-performance LiDAR for autonomous vehicles. With this product, we are redefining the limits of LiDAR and we will be able to see things no one has ever been able to see with this technology,” said David Hall, Founder and CEO, Velodyne LiDAR.

Velodyne has performed customer demonstrations of the VLS-128 and plans to produce it at scale at the company’s new Megafactory in San Jose. Hall adds, “We have been demonstrating the product for the first time to customers and they can’t wait to get their hands on them. We will be shipping the VLS-128 by the end of 2017.”

“With Velodyne’s efforts in advanced robotics, my goal is to reinvent manufacturing in the United States, making the business case for US-based production that gets shipped to autonomous vehicle development projects the world over,” said Hall.

Based on mass-produced semiconductor technologies, the VLS-128 is designed for automated assembly in Velodyne’s Megafactory using a proprietary laser alignment and manufacturing system to meet the growing demand for LiDAR-based vision systems.

A YouTube video demonstrates the new LiDAR's speed, resolution and range:

Update: MIT Technology Review quotes Velodyne CTO Anand Gopalan as saying that VLS-128 "beams are separated by angles as small as 0.1°, with a range of 300 meters, and create as many as four million data points per second as they spin through 360 degrees."


VLS-128 (right) succeeds the HDL-64 (left)

Comparison of the VLS-128 (top) and HDL-64 (bottom) point clouds
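The figures in the MIT Technology Review update above can be turned into a quick sanity check of what they mean on the road. The Python sketch below computes the gap between two beams 0.1° apart at 300 m (roughly half a meter) and the horizontal angle between consecutive firings of one channel. It assumes one return per firing and a 10 Hz spin rate; neither is stated in the post, so treat the second number as illustrative only.

```python
import math

# Figures quoted by Velodyne's CTO in the MIT Technology Review update.
CHANNELS = 128
POINTS_PER_SECOND = 4_000_000
MIN_BEAM_SEPARATION_DEG = 0.1
MAX_RANGE_M = 300.0

# Assumptions (not stated in the post): one return per laser firing,
# and a 10 Hz spin rate for the sensor head.
ROTATION_HZ = 10.0


def beam_spacing_m(range_m, separation_deg):
    """Gap between two adjacent beams on a target at range_m."""
    return 2 * range_m * math.tan(math.radians(separation_deg) / 2)


def azimuth_step_deg(points_per_s, channels, rotation_hz):
    """Horizontal angle between consecutive firings of one channel."""
    firings_per_rev = points_per_s / channels / rotation_hz
    return 360.0 / firings_per_rev


print(f"beam spacing at {MAX_RANGE_M:.0f} m: "
      f"{beam_spacing_m(MAX_RANGE_M, MIN_BEAM_SEPARATION_DEG):.3f} m")
print(f"azimuth step per channel: "
      f"{azimuth_step_deg(POINTS_PER_SECOND, CHANNELS, ROTATION_HZ):.4f} deg")
```

With these assumptions the horizontal sampling comes out close to the quoted 0.1° vertical beam separation; a faster spin rate or dual-return mode would coarsen it.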


Tuesday, November 28, 2017

Huawei Unveils its Answer to Apple Face ID

Many sites quote the German-language WinFuture, which shows a few slides from a Huawei presentation of its upcoming smartphone depth camera. The camera is said to use a "stripe projector" to create 300,000 3D points in 10s. Huawei uses its AI hardware engine to recognize faces in 400ms, slightly slower than the fingerprint sensors in the company's phones.

Monday, November 27, 2017

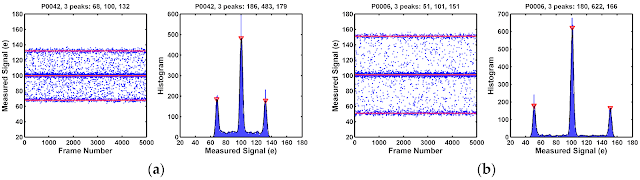

MDPI Sensors Special Issue on the 2017 International Image Sensor Workshop: TSMC Paper on RTN

MDPI Sensors has kindly agreed to publish extended versions of selected papers from the 2017 IISW. The Special Issue starts with a TSMC paper on RTN:

"Statistical Analysis of the Random Telegraph Noise in a 1.1 μm Pixel, 8.3 MP CMOS Image Sensor Using On-Chip Time Constant Extraction Method" by Calvin Yi-Ping Chao, Honyih Tu, Thomas Meng-Hsiu Wu, Kuo-Yu Chou, Shang-Fu Yeh, Chin Yin, and Chih-Lin Lee. The paper has a large collection of RTN measurement data and its interpretation.

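The paper extracts RTN time constants on chip. As a generic offline illustration only (not the authors' method), the Python sketch below simulates an idealized, already-digitized two-level RTN trace and estimates the mean dwell time in each level from run lengths. Real pixel outputs are noisy and would first need thresholding into two levels; the function names and parameter values here are hypothetical.

```python
import random


def simulate_rtn(tau_high, tau_low, n_samples, seed=0):
    """Idealized, noise-free two-level RTN trace (time in sample units).

    At each sample the trap switches state with probability 1/tau, so
    dwell times are geometric with mean tau (the discrete analogue of
    the exponential dwell times expected for a single trap).
    """
    rng = random.Random(seed)
    state, trace = 0, []
    for _ in range(n_samples):
        trace.append(state)
        tau = tau_high if state else tau_low
        if rng.random() < 1.0 / tau:
            state = 1 - state
    return trace


def dwell_times(trace):
    """Run lengths of each level, skipping runs cut off by the record edges."""
    runs = {0: [], 1: []}
    start = 0
    for i in range(1, len(trace)):
        if trace[i] != trace[i - 1]:
            if start > 0:  # the first run is truncated by the record start
                runs[trace[i - 1]].append(i - start)
            start = i
    return runs  # the last run is truncated by the record end, so it is never added


trace = simulate_rtn(tau_high=20.0, tau_low=50.0, n_samples=200_000, seed=1)
runs = dwell_times(trace)
for level in (0, 1):
    mean = sum(runs[level]) / len(runs[level])
    print(f"level {level}: {len(runs[level])} dwells, mean {mean:.1f} samples")
```

Discarding the two edge runs matters: they are systematically shorter than a true dwell and would bias the estimate for traces that contain only a few transitions.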
Face Recognition with 97% Accuracy at 10% of Apple Face ID Power

ESMChina presents the Ginwatec Deep Neural Processor, which implements face recognition with 97% accuracy at about 10-80x lower power than Apple Face ID. I wonder whether they will find a good application for it:

Ark-Invest publishes a grossly incomplete list of Neural Processing chip companies:

Meanwhile, Alipay introduced face recognition-based "Smile to Pay" service in China:


Sunday, November 26, 2017

Harvest Imaging Forum Agenda

Harvest Imaging announces its 2017 Forum Agenda “Noise in Analog Devices and Circuits” by Christian Enz:

- Introduction
  - Random signals and noise, main noise sources of circuit components
  - Noise models of basic components
- Noise Calculations in Circuits
  - Noise calculation in continuous-time (CT) circuits
  - Noise sampling
  - Noise calculation in switched-capacitor (SC) circuits
  - Noise simulation
- Trade-offs between Noise and Power Consumption
  - The simplified EKV MOS transistor model
  - The concept of inversion coefficient and the design methodology
  - Basic trade-offs in analog design
  - Figures-of-merit (FoMs) as design guidelines, key FoM parameter extraction
- Noise and Offset Reduction Techniques
  - Switch nonidealities
  - The Autozero (AZ) technique
  - The Chopper Stabilization (CS) technique
  - Recent trends in noise and offset reduction techniques
- Example of a Low-noise CMOS Imager
  - CMOS image sensors (CIS)
  - Noise reduction in CIS
  - A sub-0.5 e- rms noise VGA imager in standard CMOS
  - Future improvements
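A standard result behind agenda items such as noise sampling and SC circuits is kT/C noise: the thermal noise frozen onto a capacitor when a switch opens, with an rms value of sqrt(kT/C) in volts, or sqrt(kTC)/q in electrons. The Python sketch below evaluates both; the capacitance values are illustrative assumptions, not figures from the course. At about 1 fF the result is roughly 13 e- rms, which shows why a sub-0.5 e- rms imager cannot leave reset noise uncancelled; in 4T pixels, correlated double sampling is the usual way to remove it.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
Q_E = 1.602176634e-19  # elementary charge, C


def ktc_noise_volts(c_farads, temp_k=300.0):
    """rms voltage of kT/C sampling noise left on a capacitor."""
    return math.sqrt(K_B * temp_k / c_farads)


def ktc_noise_electrons(c_farads, temp_k=300.0):
    """Same noise expressed as rms charge in electrons: sqrt(kTC)/q."""
    return math.sqrt(K_B * temp_k * c_farads) / Q_E


for c in (1e-15, 0.5e-15):  # illustrative sense-node capacitances
    print(f"C = {c * 1e15:.1f} fF: "
          f"{ktc_noise_volts(c) * 1e6:.0f} uV rms, "
          f"{ktc_noise_electrons(c):.1f} e- rms")
```

Note the opposite trends: a smaller capacitor gives more noise in volts but less in electrons, which is one reason small sense nodes (high conversion gain) help low-noise pixel design.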

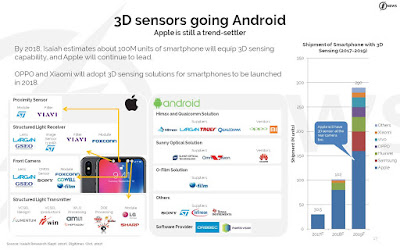

IFNews 2018 Mobile Imaging Predictions

IFNews publishes its predictions about the 2018 smartphone market, including 3D cameras and AI adoption:

Saturday, November 25, 2017

ST SPAD Imager Thesis

Justin Richardson's PhD thesis "Time Resolved Single Photon Imaging in Nanometer Scale CMOS Technology", which formed a foundation of ST's SPAD products, has been published by Edinburgh University.

Although the thesis is dated May 2010, some parts of it remain sensitive and are blacked out, especially those related to SPAD process and device modeling on pp. 84-126. Even so, the thesis can arguably serve as one of the best encyclopedias of SPAD devices, architectures, and circuits.

There is much more data and many more ideas in the thesis, too many to quote here.

Thanks to Justin Richardson for letting me know about the publication!


Friday, November 24, 2017

Curved Origami Image Sensor

University of Wisconsin-Madison reports that its researchers were able to make a curved image sensor:

A flat silicon sensor "just can’t process images captured by a curved camera lens as well as the similarly curved image sensor — otherwise known as the retina — in a human eye.

Zhenqiang (Jack) Ma has devised a method for making curved digital image sensors in shapes that mimic the convex features of an insect’s compound eye and a mammal’s concave “pinhole” eye.

To create the curved photodetector, Ma and his students formed pixels by mapping repeating geometric shapes — somewhat like a soccer ball — onto a thin, flat flexible sheet of silicon called a nanomembrane, which sits on a flexible substrate. Then, they used a laser to cut away some of those pixels so the remaining silicon formed perfect, gapless seams when they placed it atop a dome shape (for a convex detector) or into a bowl shape (for a concave detector).

“We can first divide it into a hexagon and pentagon structure, and each of those can be further divided,” says Ma. “You can forever divide them, in theory, so that means the pixels can be really, really dense, and there are no empty areas. This is really scalable, and we can bend it into whatever shape we want.”

Pixel density is a boon for photographers, as a camera’s ability to take high-resolution photos is determined, in megapixels, by the amount of information its sensor can capture."

The open-access paper has been published in Nature Communications: "Origami silicon optoelectronics for hemispherical electronic eye systems" by Kan Zhang, Yei Hwan Jung, Solomon Mikael, Jung-Hun Seo, Munho Kim, Hongyi Mi, Han Zhou, Zhenyang Xia, Weidong Zhou, Shaoqin Gong & Zhenqiang Ma


This image shows how the researchers mapped pixels onto the silicon, then cut some sections away so the resulting silicon drapes over a dome shape, with no wrinkles or gaps at the seams.


A silicon nanomembrane-based photodiode used for the electronic eyes. An array of such photodiodes was printed and fabricated on a pre-cut flexible polyimide substrate.
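Ma's "hexagon and pentagon" subdivision recalls the Goldberg polyhedra, of which the soccer ball (truncated icosahedron) is one member. Assuming, for illustration only, that the pixel layout follows such a tiling (the paper's actual pixel map may differ), the Python sketch below counts cells per subdivision level GP(h, k). Every level keeps exactly 12 pentagons while the hexagon count 10(T-1) grows without bound with the triangulation number T = h² + hk + k², which is one way to read "you can forever divide them".

```python
def goldberg_face_counts(h, k=0):
    """Return (pentagons, hexagons) for the Goldberg polyhedron GP(h, k)."""
    if h < 1 or k < 0:
        raise ValueError("need h >= 1 and k >= 0")
    t = h * h + h * k + k * k  # triangulation number T
    pentagons = 12             # fixed for every T, a consequence of Euler's formula
    hexagons = 10 * (t - 1)
    return pentagons, hexagons


for h, k in [(1, 0), (1, 1), (2, 0), (4, 0), (8, 0)]:
    p, hx = goldberg_face_counts(h, k)
    print(f"GP({h},{k}): {p} pentagons + {hx} hexagons = {p + hx} cells")
```

GP(1,1) reproduces the soccer ball (12 pentagons, 20 hexagons); by GP(8,0) there are already 630 hexagonal cells around the same 12 pentagons.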

Cambridge Mechatronics Wins Company of 2017 Award, vivaMOS Wins Emerging Technology Company of the Year Award at TechWorks

UK TechWorks names Cambridge Mechatronics the Company of the Year. The company is a world leader and pioneer in shape memory alloys, which it uses to create single-piece motors the size of a human hair, controlled to a precision of the wavelength of light. Its technology is being designed into a wide range of products: AF and OIS for smartphone cameras and drones, and devices to improve the accuracy of 3D sensing, among many other things.

BBC’s head of technology Rory Cellan-Jones said of Cambridge Mechatronics: "The company has successfully qualified their actuators of use in one of the world’s top three smartphone brands."

vivaMOS, a maker of large-area X-ray CMOS image sensors, wins the Emerging Company of the Year award. vivaMOS was founded in July 2015 as a technology spin-out from the CMOS image sensor group at Rutherford Appleton Laboratory (RAL) in the Science & Technology Facilities Council (STFC).


Thursday, November 23, 2017

NHK on 8K Image Sensor Development

IEEE Broadcast Symposium publishes a video presentation by NHK's Hiroshi Shimamoto, "Development of 8K UHDTV Cameras and Image Sensors":

Wednesday, November 22, 2017

Image Sensors at ISSCC 2018

ISSCC 2018 publishes its Advance Program. There are many interesting papers in the Image Sensors session:

5.1 A Back-Illuminated Global-Shutter CMOS Image Sensor with Pixel-Parallel 14b Subthreshold ADC

M. Sakakibara, K. Ogawa, S. Sakai, Y. Tochigi, K. Honda, H. Kikuchi, T. Wada, Y. Kamikubo, T. Miura, M. Nakamizo, N. Jyo, R. Hayashibara, Y. Furukawa, S. Miyata, S. Yamamoto, Y. Ota, H. Takahashi, T. Taura, Y. Oike, K. Tatani, T. Nagano, T. Ezaki, T. Hirayama

Sony, Japan

5.2 An 8K4K-Resolution 60fps 450ke- -Saturation-Signal Organic-Photoconductive-Film Global Shutter CMOS Image Sensor with In-Pixel Noise Canceller

K. Nishimura, S. Shishido, Y. Miyake, M. Yanagida, Y. Satou, M. Shouho, H. Kanehara, R. Sakaida, Y. Sato, J. Hirase, Y. Tomekawa, Y. Abe, H. Fujinaka, Y. Matsunaga, M. Murakami, M. Harada, Y. Inoue

Panasonic, Japan

5.3 A 1/2.8-inch 24Mpixel CMOS Image Sensor with 0.9μm Unit Pixels Separated by Full-Depth Deep-Trench Isolation

Y. Kim, W. Choi, D. Park, H. Jeoung, B. Kim, Y. Oh, S. Oh, B. Park, E. Kim, Y. Lee, T. Jung, Y. Kim, S. Yoon, S. Hong, J. Lee, S. Jung, C-R. Moon, Y. Park, D. Lee, D. Chang

Samsung Electronics, Hwaseong, Korea

5.4 A 1/4-inch 3.9Mpixel Low-Power Event-Driven Back-Illuminated Stacked CMOS Image Sensor

O. Kumagai, A. Niwa, K. Hanzawa, H. Kato, S. Futami, T. Ohyama, T. Imoto, M. Nakamizo, H. Murakami, T. Nishino, A. Bostamam, T. Iinuma, N. Kuzuya, K. Hatsukawa, B. Frederick, B. William, T. Wakano, T. Nagano, H. Wakabayashi, Y. Nitta

Sony Japan and USA

5.5 A 1.1μm-Pitch 13.5Mpixel 3D-Stacked CMOS Image Sensor Featuring 230fps Full-High Definition and 514fps High-Definition Videos by Reading 2 or 3 Rows Simultaneously Using a Column-Switching Matrix

P-S. Chou, C-H. Chang, M. M. Mhala, C-M. Liu, C-P. Chao, C-Y. Huang, H. Tu, T. Wu, S-F. Yeh, S. Takahashi, Y. Huang

TSMC, Hsinchu, Taiwan

5.6 A 2.1μm 33Mpixel CMOS Imager with Multi-Functional 3-Stage Pipeline ADC for 480fps High Speed Mode and 120fps Low-Noise Mode

T. Yasue, K. Tomioka, R. Funatsu, T. Nakamura, T. Yamasaki, H. Shimamoto, T. Kosugi, J. Sungwook, T. Watanabe, M. Nagase, T. Kitajima, S. Aoyama, S. Kawahito

NHK Science & Technology Research Laboratories, Tokyo, Japan;

Brookman Technology, Hamamatsu, Japan;

Shizuoka University, Hamamatsu, Japan

5.7 A 20ch TDC/ADC Hybrid SoC for 240×96-Pixel 10%-Reflection less than 0.125%-Precision 200m-Range Imaging LiDAR with Smart Accumulation Technique

K. Yoshioka, H. Kubota, T. Fukushima, S. Kondo, T. T. Ta, H. Okuni, K. Watanabe, Y. Ojima, K. Kimura, S. Hosoda, Y. Oota, T. Koizumi, N. Kawabe, Y. Ishii, Y. Iwagami, S. Yagi, I. Fujisawa, N. Kano, T. Sugimoto, D. Kurose, N. Waki, Y. Higashi, T. Nakamura, Y. Nagashima, H. Ishii, A. Sai, N. Matsumoto

Toshiba, Kawasaki, Japan;

Toshiba Memory, Kawasaki, Japan

5.8 1Mpixel 65nm BSI 320MHz Demodulated TOF Image Sensor with 3.5μm Global Shutter Pixels and Analog Binning

C. S. Bamji, S. Mehta, B. Thompson, T. Elkhatib, S. Wurster, O. Akkaya, A. Payne, J. Godbaz, M. Fenton, V. Rajasekaran, L. Prather, S. Nagaraja, V. Mogallapu, D. Snow, R. McCauley, M. Mukadam, I. Agi, S. McCarthy, Z. Xu, T. Perry, W. Qian, V-H. Chan, P. Adepu, G. Ali, M. Ahmed, A. Mukherjee, S. Nayak, D. Gampell, S. Acharya, L. Kordus, P. O'Connor

Microsoft, Mountain View, CA

5.9 A 256×256 45/65nm 3D-Stacked SPAD-Based Direct TOF Image Sensor for LiDAR Applications with Optical Polar Modulation for up to 18.6dB Interference Suppression

A. Ronchini Ximenes, P. Padmanabhan, M-J. Lee, Y. Yamashita, D. N. Yaung, E. Charbon

Delft University of Technology, Delft, The Netherlands;

EPFL, Neuchatel, Switzerland;

TSMC, Hsinchu, Taiwan

5.10 A 32×32-Pixel Time-Resolved Single-Photon Image Sensor with 44.64μm Pitch and 19.48% Fill-Factor with On-Chip Row/Frame Skipping Features Reaching 800kHz Observation Rate for Quantum Physics Applications

L. Gasparini, M. Zarghami, H. Xu, L. Parmesan, M. Moreno Garcia, M. Unternährer, B. Bessire, A. Stefanov, D. Stoppa, M. Perenzoni

Fondazione Bruno Kessler (FBK), Trento, Italy;

University of Bern, Bern, Switzerland
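Paper 5.8 describes a 320MHz demodulated (continuous-wave) ToF sensor. In such pixels, distance follows from the phase shift of the modulated light, d = c·φ/(4π·f_mod), and the phase wraps at the unambiguous range c/(2·f_mod), only about 0.47m at 320MHz. The Python sketch below shows this arithmetic with a four-tap phase estimate. The four-tap scheme and its sign convention (A_θ = B + A·cos(φ − θ)) are one common textbook choice, assumed here and not taken from the paper; practical CW-ToF systems commonly combine several modulation frequencies to extend range, but whether this sensor does so is not stated above.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def phase_from_four_taps(a0, a90, a180, a270):
    """Phase from four correlation samples, assuming A_theta = B + A*cos(phi - theta)."""
    return math.atan2(a90 - a270, a0 - a180) % (2 * math.pi)


def distance_m(phase_rad, f_mod_hz):
    """d = c * phi / (4 * pi * f_mod); valid only inside the unambiguous range."""
    return C * phase_rad / (4 * math.pi * f_mod_hz)


def unambiguous_range_m(f_mod_hz):
    """Distance at which the round-trip phase wraps past 2*pi."""
    return C / (2 * f_mod_hz)


f_mod = 320e6     # modulation frequency named in the paper title
true_phase = 1.0  # hypothetical target phase, radians
taps = [5.0 + 2.0 * math.cos(true_phase - math.radians(t)) for t in (0, 90, 180, 270)]
phi = phase_from_four_taps(*taps)
print(f"unambiguous range: {unambiguous_range_m(f_mod):.3f} m")
print(f"phase {phi:.3f} rad -> {distance_m(phi, f_mod) * 100:.2f} cm")
```

The differences A0 − A180 and A90 − A270 cancel the background offset B, which is why the four-tap form is popular for ambient-light robustness.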

There is also a tutorial:

T6 Single-Photon Detection in CMOS

Matteo Perenzoni, Fondazione Bruno Kessler, Trento, Italy

Every single photon carries information in position, time, etc. Single-photon devices are now demonstrated and available in several CMOS technologies, but the needed circuits and architectures are completely different from conventional visible light sensors.

This tutorial starts from the description of structure and operation of a single-photon detector, and it continues on the definition of circuits for the front-end electronics needed to efficiently manage the extracted information, addressing challenges and requirements. Then, it concludes with an overview of the different architectures that are specific for each application field, with examples in the biomedical, consumer, and space domain.
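Several of the session papers (5.7, 5.9) use single-photon detectors for direct ToF, where the front-end timestamps photon arrivals and many laser pulses are accumulated into a histogram whose peak gives the round-trip time, d = c·t/2. The Python sketch below illustrates that generic histogramming idea under idealized assumptions: no SPAD dead time or pile-up, Gaussian timing jitter, and a fixed number of uniform background counts per pulse. All parameters are hypothetical, and neither Toshiba's smart accumulation nor the interference suppression of paper 5.9 is modeled.

```python
import random

C = 299_792_458.0  # speed of light, m/s


def simulate_timestamps(distance_m, n_pulses, p_signal, bg_per_pulse,
                        window_s, jitter_s, rng):
    """Hypothetical SPAD/TDC timestamps accumulated over many laser pulses.

    Idealized: no dead time or pile-up, Gaussian timing jitter, and a fixed
    number of uniformly distributed background (ambient/dark) counts per pulse.
    """
    tof = 2 * distance_m / C
    stamps = []
    for _ in range(n_pulses):
        if rng.random() < p_signal:    # the laser echo was detected
            stamps.append(rng.gauss(tof, jitter_s))
        for _ in range(bg_per_pulse):  # background events
            stamps.append(rng.uniform(0.0, window_s))
    return stamps


def distance_from_histogram(stamps, bin_s, window_s):
    """Histogram the timestamps and convert the peak bin centre to distance."""
    n_bins = int(round(window_s / bin_s))
    hist = [0] * n_bins
    for t in stamps:
        b = int(t // bin_s)
        if 0 <= b < n_bins:
            hist[b] += 1
    peak = max(range(n_bins), key=hist.__getitem__)
    return C * (peak + 0.5) * bin_s / 2


rng = random.Random(0)
stamps = simulate_timestamps(30.0, 2000, 0.2, 1, 1e-6, 0.2e-9, rng)
print(f"estimated distance: {distance_from_histogram(stamps, 1e-9, 1e-6):.2f} m")
```

With 1ns bins the distance resolution is about 15cm; finer TDC bins or interpolation around the peak would refine it, at the cost of more histogram memory per pixel.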

One of the forums includes a Sony presentation on compressive sensing:

Compressive Imaging for CMOS Image Sensors

Yusuke Oike, Sony Semiconductor Solutions, Atsugi, Japan

The F3 forum has two more presentations:

What’s the Best Technology and Architecture for your Time of Flight System?

David Stoppa, ams AG, Rueschlikon, Switzerland

Optical Phased Array LiDAR

Michael Watts, Analog Photonics, Boston, MA


KB Securities: High-End Smartphone Camera ASP to Rise to $60-90

BusinessKorea says that the next-generation Samsung Galaxy S9 smartphone will feature a "3-stack layer" image sensor capable of 1,000 fps. The newspaper says the phone will have a dual camera on the rear. It's not clear whether both sensors would be that fast or just one of them.

Korea-based KB Securities analyst Kim Dong-won believes that "When Samsung Electronics applies 3-stack layer laminated image sensors to smartphone cameras next year, the ASP of camera modules will double or triple to US$ 60 to US$ 90 due to the installation of super high-priced image sensors, camera module parts and design changes among others."


Soitec on SOI-based Imagers

EETimes' Junko Yoshida publishes an interview with Soitec CEO Paul Boudre. The company now offers SOI wafers for imaging, in addition to its more traditional applications:

"Soitec sees a growing opportunity for its Imager-SOI. Without naming any customers, Soitec listed a number of advantages of Imager-SOI. These include the ability to lower NIR illuminator power consumption, better performance through increased signal to noise ratio, and keeping cost down — because of its “lower die size” compared to bulk for the same resolution."

One should note that the first reports of Soitec's plans to make imaging-optimized wafers appeared in 2013.

EEJournal publishes an article on Soitec wafer types and their differences:

Monday, November 20, 2017

ams Partners with Sunny Optical on 3D Camera Solutions

ams and Ningbo Sunny Opotech, a subsidiary of Sunny Optical Technology, announce a collaboration to develop and market 3D sensing camera solutions for mobile device and automotive applications to OEMs in China and the rest of the world.

Alexander Everke, CEO of ams, commented, “We are very excited about this collaboration which brings together ams’ leadership in optical sensing with Sunny Optical’s leading position in optical components and module manufacturing. Teaming up with Sunny Opotech, we are accelerating the time-to-market and availability of high quality 3D sensing solutions for smartphones and mobile devices where efficient module integration is key to enable 3D sensing for smartphone OEMs. At the same time, this collaboration allows us to pursue emerging 3D sensing opportunities in the automotive world.”

David Wang, CEO of Ningbo Sunny Opotech Co., Ltd., added, “Sunny Opotech believes that ams is the industry’s leading 3D sensing technology provider with a complete portfolio of key components and technologies enhanced by a unique patent portfolio. Combining this with Sunny Opotech’s advanced semiconductor packaging technology, optical system design, mass production abilities as well as precise active alignment and optical calibration technologies will enable us to bring Chinese OEMs and global customers optimized and comprehensive 3D sensing solutions. Sunny Opotech is very much looking forward to create substantial value for both partners through the collaboration with ams.”

Saturday, November 18, 2017

New Omnivision CEO Opens Patent War in China

Omnivision's board of directors has appointed a new CEO, Yu Renrong. Born in 1966, Yu holds Chinese nationality with no permanent residence abroad and has a Bachelor's degree. He graduated in 1990 from the Department of Radio at Tsinghua University, Beijing, and has been involved in the management of various companies since 1998.

Omnivision and Spreadtrum co-founder Datong (David) Chen has been appointed Chairman of the Board. The company's former CEO and Chairman Shaw Hong now becomes Chairman Emeritus and Chairman of OVT Strategic Development Committee.

IFNews, EEWorld, asmag, IPR: The company files two lawsuits against SmartSens claiming that its security-aimed SC5035 image sensor infringes on Omnivision's Chinese patent ZL200510052302.4.

According to iKnow site, the ZL200510052302.4 is actually a patent family:

Similarly to Omnivision's Nyxel announcement, SmartSens also says it has improved the IR sensitivity of its SC5035 sensor, and it announced this six months earlier, on April 18, 2017:

"SmartSens Technology's near-infrared enhancement is due to its new pixel structure. In the new structure, the electron capture region of each pixel is extended to more fully capture the electrons generated by the near-infrared band photons. This special pixel structure makes the photoelectric conversion efficiency of the near infrared band more than doubled compared with the original technology. At the same time, the new structure of the adjacent photodiode do a deep isolation, reducing the crosstalk between pixels, improve image clarity.

The 5-megapixel SC5035 is the first device in the SmartSens Technology CMOS image sensor lineup with the new technology, and its near-infrared (NIR) band is twice as susceptible to existing products. In the current security monitoring, machine vision and intelligent transportation systems and other applications, the night infrared fill light wavelengths concentrated in the 850nm ~ 940nm near infrared band. So the sensitivity of the near infrared band to enhance, can greatly enhance the product's night vision effect."

The SmartSens graph shows a 940nm QE of ~30% in a 2um pixel, while Omnivision's Nyxel achieves 40% QE in a 2.8um pixel:
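The two 940nm QE figures are not directly comparable because the pixel sizes differ. A rough sketch, assuming equal irradiance at the sensor and equal fill factor (both assumptions, not stated by either company), treats per-pixel signal as QE times pixel area:

```python
def relative_pixel_signal(qe: float, pitch_um: float) -> float:
    """Photoelectrons per pixel, up to a common scale factor.

    Assumes equal irradiance and equal fill factor for both sensors,
    so the signal scales as QE x pixel area (pitch squared).
    """
    return qe * pitch_um ** 2

smartsens = relative_pixel_signal(0.30, 2.0)  # SC5035: ~30% QE at 940nm, 2um pixel
nyxel = relative_pixel_signal(0.40, 2.8)      # Nyxel: 40% QE at 940nm, 2.8um pixel

print(f"QE ratio (per unit area): {0.40 / 0.30:.2f}")
print(f"per-pixel signal ratio (Nyxel / SC5035): {nyxel / smartsens:.2f}")
```

Per unit area the QE advantage is about 1.33x, while the larger Nyxel pixel collects roughly 2.6x more photoelectrons per pixel under these idealized assumptions. The gap in per-pixel signal is mostly about pixel size, not QE.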

SC5035 flyer is available on-line:

Other than SC5035, SmartSens has quite a broad lineup of sensors for security and surveillance applications:

Friday, November 17, 2017

SystemPlus Reveals that iPhone X IR Imager is SOI-based

EETimes publishes Junko Yoshida's article based on Yole Developpement and SystemPlus Consulting analysis of the Apple iPhone X TrueDepth design. The biggest surprise is that ST's IR imager uses an SOI process, said to be the first such sensor in mass production:

SystemPlus and Yole "deduced that silicon-on-insulator (SOI) wafers are being used in near-infrared (NIR) imaging sensors. They noted that SOI has played a key role in improving the sensitivity of NIR sensors — developed by STMicroelectronics — to meet Apple’s stringent demands.

Pierre Cambou, activity leader for imaging and sensors at Yole Développement, called the SOI-based NIR image sensors “a very interesting milestone for SOI.”

Apple’s adoption of ST’s NIR sensors marks the debut of SOI in mass production for image sensors, noted Cambou. “Image sensors are characterized by large surface due to the physical size of light. Therefore, this is a great market to be in for a substrate supplier” like Soitec, he added.

Yole and System Plus Consulting found inside ST’s NIR sensor “the use of silicon-on-insulator (SOI) on top of deep-trench isolation (DTI).” DTI is deployed to prevent leakage between photodiodes. Apple reportedly etched literal trenches between each one, then filled the trenches with insulating material that stops electric current.

Optically speaking, Cambou explained that SOI wafers are advantageous because the insulator layer functions like a mirror. “Infrared light penetrates deeper, and it reflects back to the active layer,” he noted. Electrically speaking, Cambou noted, SOI improves NIR’s sensitivity largely because it’s good at minimizing leakage within the pixel. The improved sensitivity provides good image contrast.

Asked if ST’s NIR sensors are using FD-SOI or SOI wafers, Cambou said that the research firms couldn’t tell.

Asked about surprises unearthed by the teardown, Cambou cited the size of ST’s NIR sensor chip. It measures 25mm², and has only 1.4 megapixels due to the large 2.8-μm pixel size.
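Two pieces of arithmetic help put the teardown remarks in context. First, Cambou's "mirror" point can be sketched with Beer-Lambert absorption: NIR light that crosses a thin active layer without being absorbed gets a second pass if the buried oxide reflects it back. Second, the pixel array of a 1.4 MP, 2.8 μm sensor can be compared with the 25mm² die. The active-layer thickness, the absorption length of silicon near 940nm, and the perfect-mirror case are illustrative assumptions, not teardown data; real BOX reflectance is partial and interference effects are ignored.

```python
import math

def absorbed_fraction(thickness_um: float, absorption_length_um: float,
                      mirror_reflectance: float = 0.0) -> float:
    """Fraction of normally incident light absorbed in a thin silicon layer.

    Beer-Lambert single pass, plus an optional second pass for light that is
    reflected back by the buried oxide. Ignores front-surface reflection
    and thin-film interference.
    """
    single_pass = 1.0 - math.exp(-thickness_um / absorption_length_um)
    transmitted = 1.0 - single_pass
    return single_pass + mirror_reflectance * transmitted * single_pass

def pixel_array_area_mm2(megapixels: float, pitch_um: float) -> float:
    """Photosensitive array area: pixel count x pixel area (um -> mm)."""
    return megapixels * 1e6 * (pitch_um * 1e-3) ** 2

# ASSUMED values for illustration only, not from the teardown.
THICKNESS_UM = 3.0    # hypothetical active silicon thickness
ABS_LENGTH_UM = 50.0  # hypothetical order-of-magnitude absorption length near 940nm

no_mirror = absorbed_fraction(THICKNESS_UM, ABS_LENGTH_UM)
ideal_mirror = absorbed_fraction(THICKNESS_UM, ABS_LENGTH_UM, mirror_reflectance=1.0)
print(f"absorbed, no mirror: {no_mirror:.3f}; ideal mirror: {ideal_mirror:.3f}")

array = pixel_array_area_mm2(1.4, 2.8)
print(f"pixel array: {array:.1f} mm2 of a 25 mm2 die ({array / 25:.0%})")
```

For a layer much thinner than the absorption length, an ideal mirror nearly doubles the absorbed fraction, which is consistent with the sensitivity argument above. The array itself comes to about 11mm², under half of the 25mm² die, with the rest presumably taken by readout circuitry, pads and other periphery.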

Google Applies for RGB-Z Sensor Patent

Google patent application US20170330909 "Physical Layout and Structure of RGBZ Pixel Cell Unit For RGBZ Image Sensor" by Chung Chun Wan and Boyd Albert Fowler is a continuation of the PCT filing WO/2016/105664 from 2014. The application proposes a 5T pixel for ToF imaging intermixed with 4T or 5T RGB pixels:

Thursday, November 16, 2017

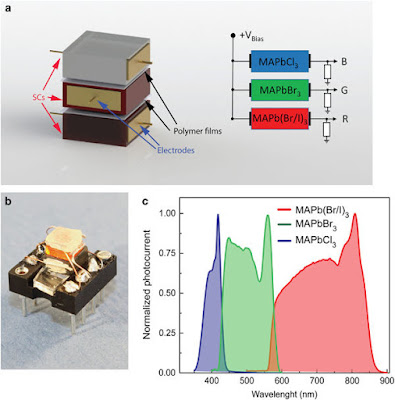

Perovskite Materials for Foveon-like Pixels

Optics.org: A group of researchers from Empa and ETH Zurich publish a paper in Nature called "Non-dissipative internal optical filtering with solution-grown perovskite single crystals for full-colour imaging" by Sergii Yakunin, Yevhen Shynkarenko, Dmitry N Dirin, Ihor Cherniukh & Maksym V Kovalenko. Crystals of semiconducting methylammonium lead halide perovskites (MAPbX₃, where MA = CH₃NH₃⁺ and X = Cl⁻, Br⁻ and Br/I⁻) are used as absorbers for full-color Foveon-like stacked imaging:
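The idea of "internal optical filtering" in a stack can be illustrated with idealized step absorbers: each layer absorbs every photon shorter than its band-edge cutoff and transmits the rest, so the layer on top acts as the color filter for the layers below instead of a separate dye filter. The cutoff wavelengths below are hypothetical round numbers chosen for illustration, not the band edges reported in the paper.

```python
from typing import Optional

# HYPOTHETICAL step-absorber stack, listed top to bottom. Cutoffs are
# illustrative assumptions. The widest-bandgap layer (shortest cutoff)
# must be on top so that it filters light for the layers beneath it.
LAYERS = [
    ("blue", 430.0),   # e.g. a chloride-based perovskite crystal
    ("green", 550.0),  # e.g. a bromide-based perovskite crystal
    ("red", 680.0),    # e.g. a mixed bromide/iodide perovskite crystal
]

def channel_for(wavelength_nm: float) -> Optional[str]:
    """Return the channel whose layer absorbs a photon of this wavelength.

    Each layer is idealized as absorbing everything shorter than its cutoff
    and transmitting everything longer, so light passed on by the upper
    layers reaches the next layer down instead of being discarded.
    """
    for channel, cutoff_nm in LAYERS:
        if wavelength_nm <= cutoff_nm:
            return channel
    return None  # longer than every cutoff: transmitted through the stack

for wl in (420, 520, 620, 750):
    print(wl, "nm ->", channel_for(wl))
```

Real absorbers have gradual band edges, so practical stacked sensors typically need some color correction between channels; the step model only shows why the ordering of the layers matters.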

Embedded Image and Vision Processing

Yole Developpement report on Embedded Image and Vision Processing says:

"The image signal processor (ISP) market offers a steady compound annual growth rate (CAGR) of 6.3%, making the total market worth $4,400M in 2017. Meanwhile, the vision processor market is exploding, with a 30.7% CAGR and a market worth $653M in 2017!

The main goal of this report is to understand what is happening with the emergence of AI. Even if it is not a new technology, thanks to technological factors AI has made a spectacular entry into vision systems.

The AI market is therefore expected to reach $35B in 2025 with an estimated CAGR at 50% per year from 2017-2025."
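The CAGR figures can be sanity-checked by inverting constant compound growth. The AI projection ($35B in 2025 at 50% per year over 2017-2025) implies a 2017 starting point of roughly $1.37B. The ISP projection in the sketch assumes the 6.3% rate holds through 2025, which the quoted text does not state; the function names are illustrative.

```python
def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Invert constant compound growth: final = base * (1 + cagr) ** years."""
    return final_value / (1.0 + cagr) ** years

def project(base_value: float, cagr: float, years: int) -> float:
    """Compound a base value forward at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years

# AI market: $35B in 2025 at a 50% CAGR from 2017 (8 compounding years).
ai_2017 = implied_base_value(35e9, 0.50, 2025 - 2017)
print(f"Implied 2017 AI market: ${ai_2017 / 1e9:.2f}B")

# ISP market: ASSUMES the 6.3% CAGR continues from 2017 through 2025.
isp_2025 = project(4.4e9, 0.063, 2025 - 2017)
print(f"ISP market in 2025 if 6.3% held: ${isp_2025 / 1e9:.2f}B")
```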
